Using Photographs to Enhance Videos of a Static Scene

Pravin Bhat, C. Lawrence Zitnick, Noah Snavely, Aseem Agarwala, Maneesh Agrawala, Brian Curless, Michael Cohen, Sing Bing Kang

Abstract

We present a framework for automatically enhancing videos of a static scene using a few photographs of the same scene. For example, our system can transfer photographic qualities such as high resolution, high dynamic range, and better lighting from the photographs to the video. Additionally, the user can quickly modify the video by editing only a few still images of the scene. Finally, our system allows a user to remove unwanted objects and camera shake from the video. These capabilities are enabled by two technical contributions presented in this paper. First, we make several improvements to a state-of-the-art multiview stereo algorithm in order to compute view-dependent depths using video, photographs, and structure-from-motion data. Second, we present a novel image-based rendering algorithm that can re-render the input video using the appearance of the photographs while preserving certain temporal dynamics such as specularities and dynamic scene lighting.

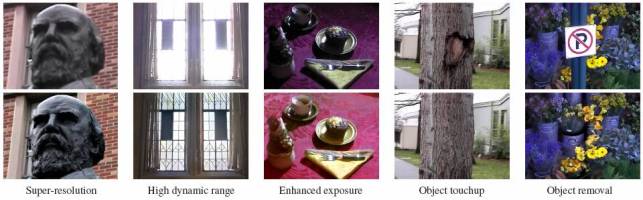

Some of the video enhancements produced by our system. Given a low-quality video of a static scene (top row) and a few high-quality photographs of the scene, our system can automatically produce a variety of video enhancements (bottom row). Enhancements include the transfer of photographic qualities such as high resolution, high dynamic range, and better exposure from photographs to video. The video can also be edited in a variety of ways (e.g., object touch-up, object removal) by simply editing a few photographs or video frames.