Identifying Redundancy and Exposing Provenance in Crowdsourced Data Analysis

Wesley Willett, Shiry Ginosar, Avital Steinitz, Björn Hartmann, Maneesh Agrawala

Abstract

We present a system that lets analysts use paid crowd workers to explore data sets and helps analysts interactively examine and build upon workers' insights. We take advantage of the fact that, for many types of data, independent crowd workers can readily perform basic analysis tasks like examining views and generating explanations for trends and patterns. However, workers operating in parallel can often generate redundant explanations. Moreover, because workers have different competencies and domain knowledge, some responses are likely to be more plausible than others. To efficiently utilize the crowd's work, analysts must be able to quickly identify and consolidate redundant responses and determine which explanations are the most plausible. In this paper, we demonstrate several crowd-assisted techniques to help analysts make better use of crowdsourced explanations: (1) We explore crowd-assisted strategies that utilize multiple workers to detect redundant explanations. We introduce color clustering with representative selection--a strategy in which multiple workers cluster explanations and we automatically select the most-representative result--and show that it generates clusterings that are as good as those produced by experts. (2) We capture explanation provenance by introducing highlighting tasks and capturing workers' browsing behavior via an embedded web browser, and refine that provenance information via source-review tasks. We expose this information in an explanation-management interface that allows analysts to interactively filter and sort responses, select the most plausible explanations, and decide which to explore further.

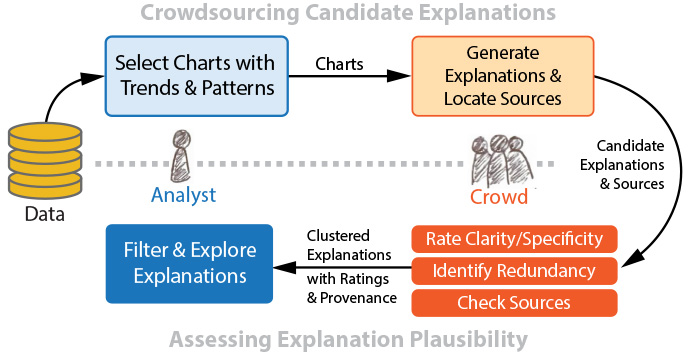

We demonstrate several crowd-assisted techniques for generating and managing possible explanations for trends and patterns in datasets. In this workflow, an analyst automatically generates charts and workers provide explanations. Other workers then assess explanation plausibility by rating explanations, identifying redundancy, and checking sources. Analysts can then interactively explore the results. This paper focuses on the latter half of the workflow.